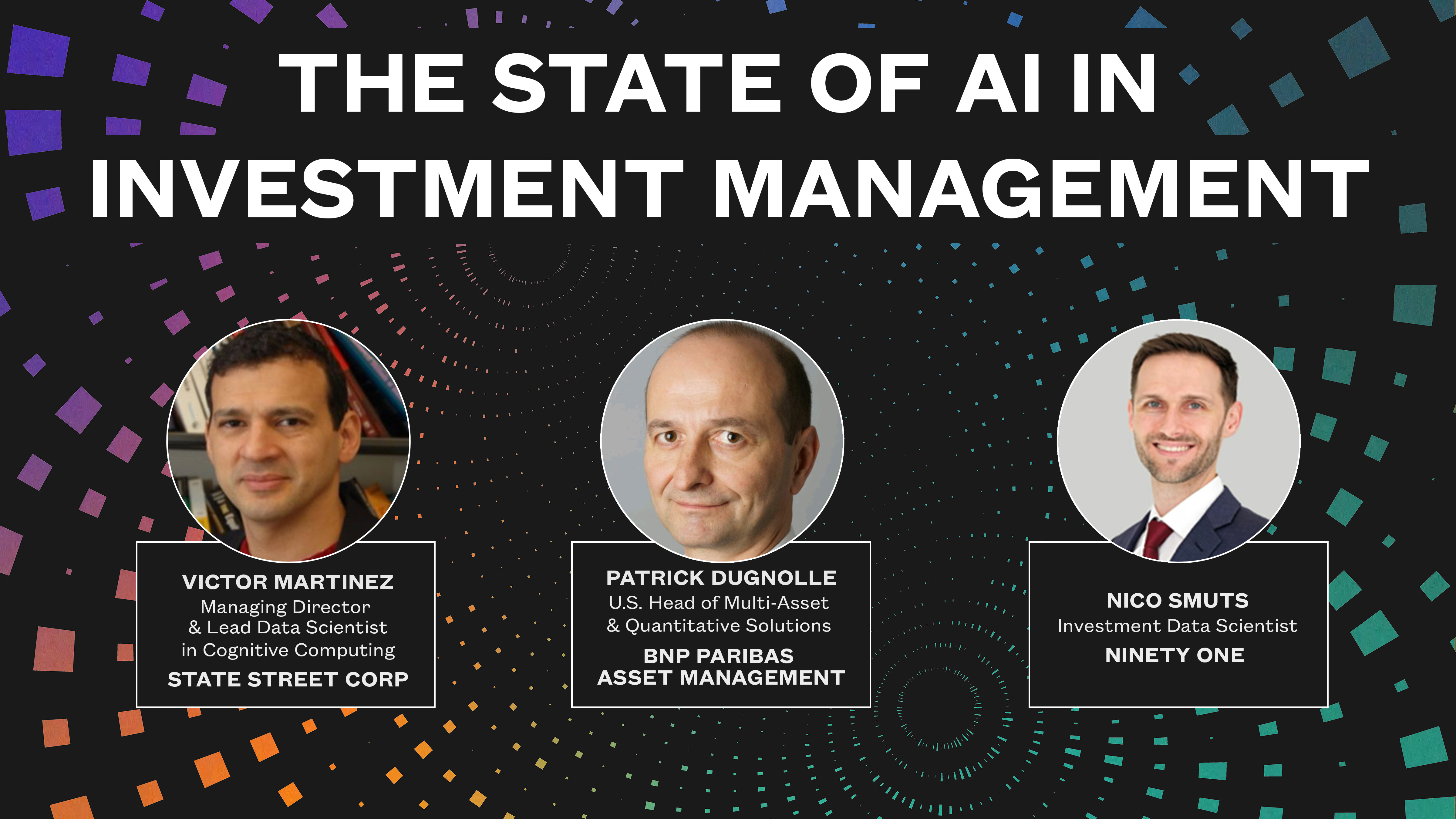

Lead Data Scientist

State Street

Overview

In this presentation, Victor Martinez talks about the complexities of properly applying machine learning algorithms to today’s financial markets.

Primarily focusing on time series implementation and analysis, he differentiates between stationary and non-stationary relationships to describe the difficulty in creating reliable and flexible models. Financial markets are fluid and constantly changing, a factor that is difficult to capture in models that typically predict static trends from historical data.

Machine learning can take complex data structures and find underlying trends that may seem minute at first. Financial markets already operate at high efficiency because of competition working in tandem with supply and demand. With markets this efficient, there may seem to be little left to improve or exploit. However, if we can understand the patterns by which a market in a given state becomes more efficient, those patterns can inform predictions that lead to future profits. As you can imagine, condensing all of the variables that make up financial markets into a single output is a complicated task.

Fortunately, it is one that machine learning excels at through neural networks. A neural network works in part by condensing information into a ‘kernel’. As the picture below depicts, several different factors can be iteratively reduced into smaller pieces of data, which can then be used to generate a single prediction (or a much smaller pool of predictions).
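The iterative reduction described above can be sketched as a stack of layers whose width shrinks at each step. This is only an illustration under stated assumptions, not the architecture from the talk: the layer widths, the eight input factors, and the random (untrained) weights are all hypothetical.

```python
import math
import random

def dense_layer(inputs, weights):
    """One fully connected layer: weighted sums passed through tanh."""
    return [math.tanh(sum(w * v for w, v in zip(row, inputs))) for row in weights]

def random_weights(n_out, n_in, rng):
    """Placeholder weights; a real network would learn these from data."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]

rng = random.Random(0)
layer_sizes = [8, 4, 2, 1]  # hypothetical widths: 8 factors reduced to 1 output

# Eight made-up market factors (for example, scaled returns or spreads).
activations = [0.2, -0.1, 0.4, 0.0, -0.3, 0.1, 0.05, -0.2]
widths = [len(activations)]

for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
    activations = dense_layer(activations, random_weights(n_out, n_in, rng))
    widths.append(len(activations))

print(widths)  # [8, 4, 2, 1]
```

Each pass halves the number of values carried forward, so the final layer emits a single number. With trained weights in place of the placeholders, that number would be the model's prediction.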

It is easy to point to areas where machine learning has succeeded at this kind of data and feature reduction. The layperson can see it in everyday life: image processing that has led to self-driving cars, language processing that has led to text-to-speech functionality, and audio processing that has led to voice recognition. These popular examples share a common denominator: they are all represented by stationary variables. Stationary variables are relationships between data points that remain the same over time. For example, when a person’s face is analyzed in image processing, the data points for different facial features hold the same relationship to one another regardless of the perspective from which the image is taken. Even as a person ages, the relationship between data points changes only marginally.

As Victor mentions, predicting stationary variables can be valuable in financial markets. This is often done with time series models, which are fit to historical, chronologically ordered data points, checked against actual results, and then used to forecast future data points. However, non-stationary variables represent financial markets much more accurately, because they can aggregate multivariate time series to match constantly evolving markets.

“Using fractal structures, structures built on structures, we can reduce complex patterns from multiple variables to basic elements to reveal otherwise hidden patterns.”

Non-stationary variables arise where the features that determine an outcome are constantly changing with respect to one another. Victor aptly compares this to a school of fish: the general shape of the school stays the same, but at any given second one fish’s location within the school and its spacing from other fish are changing. In financial terms, this can be thought of as the composition of assets that make up a portfolio. As a portfolio moves through time, its assets change, and in changing they affect other assets in different ways. Additionally, not all of this change is meaningful: noise in the data that does not contribute to trend changes presents an additional modeling challenge. Between filtering out noise and tracking changing variable coefficients, there is a high risk of inference problems, both within the model and on the part of those building it.
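To make the idea of changing variable coefficients concrete, here is a minimal sketch that compares a fixed relationship with a drifting one using a rolling least-squares slope. The series, the coefficient values, and the helper name `rolling_slope` are all made up for illustration, and the data is noise-free to keep the result easy to read.

```python
import math

def rolling_slope(x, y, window):
    """Least-squares slope (through the origin) of y on x over each trailing window."""
    slopes = []
    for end in range(window, len(x) + 1):
        xs = x[end - window:end]
        ys = y[end - window:end]
        sxx = sum(a * a for a in xs)
        sxy = sum(a * b for a, b in zip(xs, ys))
        slopes.append(sxy / sxx)
    return slopes

n = 200
# A made-up driver series, kept strictly positive so every window has sxx > 0.
x = [math.sin(0.3 * t) + 1.5 for t in range(n)]

# Stationary relationship: the coefficient linking y to x never changes.
stationary_y = [2.0 * xi for xi in x]

# Non-stationary relationship: the coefficient drifts linearly from +2 to -2.
drifting_y = [(2.0 - 4.0 * t / (n - 1)) * xi for t, xi in enumerate(x)]

stable = rolling_slope(x, stationary_y, window=20)
drifting = rolling_slope(x, drifting_y, window=20)

print(round(min(stable), 6), round(max(stable), 6))
print(round(drifting[0], 3), round(drifting[-1], 3))
```

The stable series yields the same slope in every window, while the drifting series starts with a positive slope and ends with a negative one. A model fit once on the early data would get the sign of the relationship wrong by the end of the sample.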

Neural networks can again help here by running multivariate systems through recurrent networks. Once a target is identified and a consistent reward function can be applied in an iterative reinforcement learning process, machine learning algorithms can perform a kind of intelligent inference based on statistical methods run within the hidden layers between their input and output. This process helps track the movements of each element of the model. Successfully tracking these movements helps identify the key features behind past trends and can help predict how future features will affect future trends.
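The recurrent part of this idea can be sketched with a single Elman-style cell that carries a hidden state from one time step to the next. This is a rough illustration only: the weights are hand-picked rather than trained, the two-variable series is made up, and the reward function and reinforcement learning loop mentioned above are not shown.

```python
import math

def rnn_step(x, h, w_in, w_h):
    """One Elman-style recurrent update: h_new = tanh(w_in @ x + w_h @ h)."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(w_in[i], x))
            + sum(w * hi for w, hi in zip(w_h[i], h))
        )
        for i in range(len(h))
    ]

def run_sequence(sequence, w_in, w_h):
    """Feed a multivariate series through the cell and return the final hidden state."""
    h = [0.0] * len(w_h)
    for x in sequence:
        h = rnn_step(x, h, w_in, w_h)
    return h

# Hand-picked illustrative weights (untrained, hypothetical).
w_in = [[0.5, -0.3], [0.2, 0.4]]
w_h = [[0.1, 0.0], [0.0, 0.1]]

# Two time steps of a two-variable series (made-up values).
series = [[1.0, 0.0], [0.0, 1.0]]

forward = run_sequence(series, w_in, w_h)
backward = run_sequence(list(reversed(series)), w_in, w_h)

print(forward)
print(backward)
```

The same two observations produce different hidden states depending on their order. That order sensitivity is what lets a recurrent network track how variables move relative to one another through time, instead of treating each observation in isolation.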
