“Ask Me Anything” with Zappos’s Head of AI/ML Research & Platforms, Ameen Kazerouni

By Ai4, March 19, 2020

Head of AI/ML Research & Platforms

Zappos Family of Companies

Ai4 recently hosted an "Ask Me Anything" session with one of our speakers, Ameen Kazerouni, on the Ai4 Slack channel. Read the full transcript below.

MODERATOR: Hello everyone! It’s a pleasure to welcome our first-ever AMA guest, Ameen Kazerouni. Ameen is the Head of AI/ML Research & Platforms for Zappos. You now have one hour to ask him anything. Ready, set... GO!

AMEEN: Hello! Thanks for having me. Looking forward to answering some questions and excited to have some fun!

PARTICIPANT 1: Hey Ameen - can you share with us one or two of the biggest challenges you've faced in setting up your team/environment to be a well-oiled machine that successfully delivers business outcomes?

AMEEN: Thanks for the question! I would say the 2 primary challenges were...

- Identifying the right business problem, i.e., not chasing after the fanciest ML/AI technique, but truly identifying an area where we could get a solution into production in an MVP state and scale it to a tangible bottom-line impact.

- The second challenge is getting buy-in. AI-style solutions require sizable technical investment and specialized recruiting. I helped start the ML org at Zappos, so I know that getting the funds allocated to kick it all off required some strong storytelling.

PARTICIPANT 1: Thanks Ameen! This tracks, as we've received the same type of feedback from our conversations with organizations that have made heavy R&D investments in ramping up their AI programs.

PARTICIPANT 2: I can imagine all of the normal business uses of AI for Zappos but where might the AI be used that isn't really obvious?

AMEEN: So we use AI and ML in the places you'd expect: our internal search ranking, personalization, marketing, inventory management, etc. A cool area where we've seen a lot of gains is an ML-driven sizing prediction model. We were able to drastically cut down our sizing-related return rate without compromising the customer experience. It's a great example of AI being a win for the org and a win for the customer experience at the same time.

PARTICIPANT 3: Do you mean checking whether shoe sizes or small/medium/large sizing is related to a high return rate? Which algorithm did you use?

AMEEN: Yes, that is what we ended up building. It actually predicts your exact size rather than recommending going up or down. It's not an out-of-the-box algorithm; it's something we had to build in house, since this turned into a relatively complex problem to solve. It's a combination of K-Means followed by collaborative filtering, which contributes to a broader feature space that feeds into a classifier that makes live inferences for the customer on the website. The classifier was initially a LightGBM model and was recently transitioned to an ANN.
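To make the first stage of that pipeline concrete, here is a minimal pure-Python sketch of K-Means (Lloyd's algorithm). It is an illustration under assumptions, not Zappos's implementation: the 2-D customer points (an average purchased size and a hypothetical "width score") are made up, and the collaborative-filtering stage and the LightGBM/ANN classifier are not shown. In a pipeline like the one described, the resulting cluster IDs would be just one input to the broader feature space.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def kmeans(points: Sequence[Point], k: int, iters: int = 100, seed: int = 0) -> Tuple[List[Point], List[int]]:
    """Lloyd's algorithm: alternate an assignment step and a centroid-update step."""
    rng = random.Random(seed)
    centroids = [tuple(p) for p in rng.sample(list(points), k)]  # start from k randomly chosen data points
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of the points assigned to it.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster's centroid where it was
        converged = new_labels == labels and new_centroids == centroids
        labels, centroids = new_labels, new_centroids
        if converged:
            break
    return centroids, labels


# Hypothetical (avg_purchased_size, width_score) pairs for six customers.
customers = [(7.0, 1.0), (7.5, 1.2), (7.2, 0.9), (10.0, 3.0), (10.5, 3.2), (9.8, 2.9)]
centroids, labels = kmeans(customers, k=2)
print(labels)
```

The cluster ID itself is arbitrary (0 or 1); what matters downstream is that similar customers share one.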

PARTICIPANT 4: Hi Ameen - Thanks for your time!

Given the drastic change in retail consumer behavior recently due to COVID-19, what steps are the AI/ML team taking to help the business weather the storm?

AMEEN: The primary concern is the health and safety of the employees at Zappos. Enabling people to WFH has been a top priority. From the AI/ML-specific perspective, we're doing our best to model the impact on the business, which we are sharing with leadership on a live basis. Our forecasting team is the one primarily engaging on this front.

PARTICIPANT 5: This probably gets asked all the time but what technical skills/personality traits do you look for when building a team? Do you have a mix of people from many backgrounds or do they all tend to come from the same areas?

AMEEN: It really depends on the point you're at in the journey of building your org. I would definitely advise against immediately trying to build out a team of PhDs and sticking them on research problems. I would focus on hiring specifically for achievable tasks and building out from there. You will often find that you can "upskill" existing tech talent inside your org to tackle the initial use cases and build out your POCs.

For us, the team expanded in roughly this order:

- Data analysts

- Statisticians

- Software engineers

- Deep learning specialists

- Machine learning engineers

That order can change entirely depending on your situation.

PARTICIPANT 6: What is the most compelling thing to tell investors so they will fund you?

AMEEN: I don't have a lot of experience here, but from the perspective of securing internal funding, here is a piece of advice that has not led us astray. Don't go looking for problems for a fancy new algorithm your ML team read about. Instead, focus on finding the simplest ML/AI solution for existing problems that investors care about. The funding usually follows.

PARTICIPANT 7: Hey Ameen, thanks for doing this! What skills/pathways would you recommend anyone getting into AI/ML who has some CompSci background?

AMEEN: The trait I really look for while recruiting is the ability to think outside the box. A lot of the other skills can be augmented with internal training, provided you have the ability to identify which portions of the data hold the solution to a particular problem. Of course, familiarity with statistics, probability, and some kind of data-science-friendly programming language (Python, R, or Scala) doesn't hurt.

PARTICIPANT 8: Hi Ameen! Are you using AI with self-service call center contacts, such as chatbots? What about cloud-based IVR and speech? Any trends to share?

AMEEN: We are exploring leveraging forecasting to make sure the call center is better staffed. Zappos prides itself on a fantastic call center experience, and our customer loyalty team forms the backbone of the customer experience. We have no plans to automate away our secret weapon. We do, however, use AI and ML to help call center employees find products and answer questions faster, by leveraging the same models we use to optimize the on-site experience.

PARTICIPANT 8: The call center experience is legendary indeed! We are starting to use AI to mine call recordings for frequent words and comments, and then update staff so they can answer questions more accurately.

PARTICIPANT 9: I have a few additional questions. Feel free to answer as little/many, but:

- How can a company ensure its AI investment yields results?

- How can a company ensure its deployment timeline is as short as possible?

- Which roles within Zappos are involved in AI/ML?

AMEEN: These are some tough questions.

- I think you should treat it like any other project. There is a lot of low-hanging fruit that can be optimized with AI/ML, and I'd focus on chasing after that first: look for clear improvement in existing KPIs and measurable results in A/B tests. The mistake is treating ML as something special, where you don't see a return and that's okay because everyone should be doing ML. I personally believe that's a recipe for disaster. Either it's a research project that you are betting on, or it works in a production setting, or it fails and you learn and iterate on that failure.

- This could be a whole other AMA in itself. Zappos has a pretty robust AI/ML platform that we've developed over the last few years, after shoring up some wins and getting more funding.

- The Machine Intelligence org that I lead has data analysts, forecasting specialists, deep learning specialists, software engineers, ML engineers, and cloud architects. This happened over time; there's no need for all of it at kickoff.
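Judging an ML change by "measurable results in A/B tests", as suggested in the first answer above, often comes down to a standard two-proportion z-test on a KPI such as conversion rate. The sketch below is a generic textbook illustration with made-up counts, not Zappos's testing methodology, and uses only the standard library.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple:
    """Return (z, two-sided p-value) for H0: both variants have the same conversion rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # pooled rate under H0
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|)) for a standard normal
    return z, p_value


# Hypothetical: control converts 1,000 of 20,000 sessions; the ML variant converts 1,100 of 20,000.
z, p = two_proportion_z_test(1000, 20000, 1100, 20000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these invented numbers the lift from 5.0% to 5.5% gives z of roughly 2.24 and p of roughly 0.025, which would usually count as a significant improvement at the 5% level.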

PARTICIPANT 10: What would you say is the main difference between an AI Platform within an enterprise and a traditional software development platform?

AMEEN: In my opinion, you should try to minimize the differences. From an operational-excellence perspective, you should stick to existing deployment protocols, security standards, and team SLAs. That being said, there will be some fundamental differences in portions of the stack. Your ML automation framework will need to deal with a lot of long-running jobs that you traditionally don't deal with. Your event ingestion framework will need to handle high-throughput data, and your API layer will need access to up-to-date feature libraries to make live inferences. As long as you address each of those use cases with the established protocols for operational excellence in mind, you should emerge with a robust stack that meets your needs.

PARTICIPANT 11: The pandas DataFrame and the ML packages built around it, such as scikit-learn, are widely used today as the de facto go-to tools. But pandas usually cannot handle very large datasets, and that's where something like Spark DataFrames and PySpark comes in, but the syntax of its ML packages is very different. I am sure datasets are very large at Zappos. At work, how do you handle issues like that, especially if the source datasets are sitting on HDFS? (Options I can think of are rewriting everything in PySpark MLlib or using pandas UDFs.) In particular, how do you productionize your ML work: which technologies, syntax, ML packages, etc.?

AMEEN: Great question! We've had to get pretty inventive in how we tackle getting solutions into production. Our workflow usually allows the ML scientist to use whatever language they are comfortable with during the research phase. This lets them be as comfortable as possible during the toughest part of the job, which is finding the signal in the noise. We often find that sampling data during this phase and working with subsets of the data can get you on the right track before you solve the scale issue. When we do need to solve a problem at scale, we take one of two approaches.

- Custom software developed by our ML engineers, running on large machines. This can be a single-node machine running highly parallelized code.

- We leverage a sizable EMR cluster that has access to our data lake, where we work in Spark. We usually stick to Java or Scala Spark here, since the deployment process plugs into our existing frameworks very cleanly.

The cluster itself accesses the data in S3, which our DAG orchestrator unloads before the job kicks off. The feature engineering, data unload, and modeling steps are all decoupled so they can scale independently, and they are stitched together using an in-house orchestrator. Another asset has been leveraging contract-first formats such as Avro, Parquet, and protocol buffers. Protobufs compile into multiple languages, including Java, Scala, Python, and R, which allows us to stay language agnostic. We're able to use base64-encoded protobufs as an intermediary layer between steps. This is particularly useful when deploying precomputed inferences into Dynamo for fast access.
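The base64 intermediary layer described above can be sketched with the standard library. Real protobufs need a `.proto` schema compiled with `protoc`, so this sketch swaps in a fixed binary layout packed with `struct` as a stand-in for a serialized protobuf message; the record fields (`product_id`, `predicted_size`, `confidence`) are hypothetical. What it illustrates is the wrapping step: a binary payload encoded as base64 becomes plain ASCII text that any step, in any language, can pass along or store as a string and decode again.

```python
import base64
import struct

# Hypothetical fixed layout standing in for a compiled protobuf message:
# little-endian uint64 product_id, float32 predicted_size, float32 confidence (16 bytes total).
RECORD_FORMAT = "<Qff"


def encode_record(product_id: int, predicted_size: float, confidence: float) -> str:
    """Serialize to bytes, then base64-encode so the payload travels as ASCII text."""
    raw = struct.pack(RECORD_FORMAT, product_id, predicted_size, confidence)
    return base64.b64encode(raw).decode("ascii")


def decode_record(payload: str) -> tuple:
    """Reverse both steps: base64-decode, then unpack the binary layout."""
    raw = base64.b64decode(payload)
    return struct.unpack(RECORD_FORMAT, raw)


payload = encode_record(123456789, 9.5, 0.875)
print(payload)                 # a 24-character ASCII string
print(decode_record(payload))  # (123456789, 9.5, 0.875)
```

With real protobufs the schema, not a format string, is the contract, which is what lets Java, Scala, and Python steps agree on the bytes.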

Another thought that comes to mind here is leveraging microservice APIs. We often break an ML solution down into smaller components and ask: can we precompute portions and deploy them in Dynamo, so we are not making decisions at run time, and only do live inference for the pieces of the solution that require data available only in real time? Going back to sizing as an example, 95% of the computation can be done ahead of time on our EMR cluster, using protobufs to remain language agnostic, and only the final piece of the inference is made in real time.
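The split between offline precomputation and a thin real-time step can be sketched as follows. A plain dict stands in for the key-value store (Dynamo in Ameen's description), and the key format, the "mean of past sizes" computation, and the `brand_fit_offset` adjustment are all hypothetical simplifications. The point is only the shape of the design: the expensive work is keyed and stored ahead of time, and the request path does one lookup plus a cheap final calculation on data available only at request time.

```python
from typing import Dict, List, Optional

# Stand-in for a key-value store such as DynamoDB, filled by an offline batch job.
precomputed_store: Dict[str, float] = {}


def offline_batch_job(customer_history: Dict[str, List[float]]) -> None:
    """Expensive step, run ahead of time: derive and store each customer's base size."""
    for customer_id, kept_sizes in customer_history.items():
        # Hypothetical heavy computation, reduced here to the mean of previously kept sizes.
        precomputed_store[f"base_size#{customer_id}"] = sum(kept_sizes) / len(kept_sizes)


def realtime_inference(customer_id: str, brand_fit_offset: float) -> Optional[float]:
    """Cheap request-time step: one lookup plus the part that depends on live data."""
    base = precomputed_store.get(f"base_size#{customer_id}")
    if base is None:
        return None  # no precomputed entry; a real service would need a fallback
    # The offset depends on the product being viewed, so it is only known at request time.
    return round((base + brand_fit_offset) * 2) / 2  # snap to the nearest half size


offline_batch_job({"c1": [9.0, 9.5, 9.5], "c2": [7.0, 7.0]})
print(realtime_inference("c1", brand_fit_offset=0.5))  # 10.0
```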

PARTICIPANT 11: Would that be something like reading the dataset with pandas in chunks and writing a for loop to do the modeling?

AMEEN: The data here is too large to work with in Python, or at least that's what we found. Even if you find a way to use pandas, you'll run into issues with the GIL and find yourself either out of memory or not using all of your CPU. We prototyped in Python and then translated into Java Spark for this one. There are ways to stick with Python and get around these issues; however, we had the engineering talent on hand to migrate, so we went that route instead.

PARTICIPANT 11: So before it gets to the engineers, would data scientists test things out with pandas and the pandas-based ML packages? Or would they code in Scala or Java Spark? (I can see that the latter might be more efficient. FYI, I am more on the stats side and not as familiar with tech/CS.)

AMEEN: It depends on how confident you are in the project. We don't try to translate to Java/Scala Spark unless it's required; it can get pretty expensive. Often we will use Python without the existing ML packages and do some native Python work, which can actually be really fast and handle sizable amounts of data on a beefy machine.

PARTICIPANT 12: What do you think about explainability in AI and the need for it in business use cases? Are there any use cases at Zappos you have come across where explainability would be helpful?

AMEEN: Love it! I think explainability is not discussed enough. It is critical to keep in mind while developing solutions. Telling leadership "sales are going to be down" followed by "I don't know why" is never useful. So, depending on the use case, I would strongly encourage compromising on a model's accuracy in order to use a technique that offers explainable solutions. For sizing predictions for customers, I believe it's okay to be a black box. For inventory forecasts, you probably want to surface actionable, explainable levers to your consumers.
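One simple way to get the kind of explainable levers described for inventory forecasts is a linear model, whose prediction splits exactly into per-feature contributions. The sketch below is illustrative only: the intercept, coefficients, and feature names are hypothetical, and fitting the model is not shown.

```python
from typing import Dict, List, Tuple

# Hypothetical fitted linear model: units_sold = INTERCEPT + sum(coef * feature_value).
INTERCEPT = 120.0
COEFFICIENTS = {"discount_pct": 3.0, "marketing_spend_k": 1.5, "weeks_since_launch": -2.0}


def explain_forecast(features: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the forecast and each feature's additive contribution, largest magnitude first."""
    contributions = [(name, coef * features[name]) for name, coef in COEFFICIENTS.items()]
    forecast = INTERCEPT + sum(c for _, c in contributions)
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return forecast, contributions


forecast, levers = explain_forecast({"discount_pct": 10, "marketing_spend_k": 20, "weeks_since_launch": 12})
print(forecast)  # 156.0, i.e. 120 + 30 + 30 - 24
for name, contribution in levers:
    print(f"{name}: {contribution:+.1f}")
```

Because every contribution is additive, a forecast that drops can be traced to the specific inputs that moved it, which is exactly the "why" a black-box model struggles to give.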

PARTICIPANT 13: Which AI tools / platforms that you’ve adopted recently have you found made a big impact?

AMEEN: One of our principal engineers has developed a method to automate endpoint creation for new precomputed ML models. That has proven tremendously useful; we are able to iterate very fast with this new development. In terms of external vendors, we recently started using Comet.ml, which is a framework for tracking hyperparameters and model training cycles. This has been extremely useful in identifying opportunities to improve various models.

PARTICIPANT 14: How much of Deep Learning is used in production at Zappos? What are the challenges which you have faced in moving to deep learning from traditional statistical models?

AMEEN: We honestly try to minimize the use of deep learning where possible. It's expensive, and it sometimes encourages data scientists who are new to the field to take a somewhat random "make the network larger" approach to problem solving. I'd much rather we exhaust other, more explainable, cheaper-to-train options than jump straight to deep learning. That being said, there are places where deep learning is without a doubt the right choice. We use it to extract image embeddings from product imagery that contribute to other models and feature spaces across the ecosystem. We also use deep learning to extract phrase embeddings to make natural-language and semantic improvements to our search algorithms. The biggest challenges:

1. Tough to hire for

2. Shortage of talent

3. Expensive to train

4. Long training times can still end in an expensive failure

PARTICIPANT 14: For 4: Doesn’t transfer learning make it quite fast to train from publicly available pre-trained models?

AMEEN: You'll find that you actually run into a lot of licensing issues when trying to use publicly available models or datasets. When it's possible, though, yes, it can provide substantial speedups, without a doubt!

PARTICIPANT 15: Various companies at the Ai4 Retail conference last year talked about using computer vision for search. Are you incorporating some of these techniques to help customers find similar products?

AMEEN: I remember a few of those presentations! Walmart Labs had a really cool talk that year on how it was leveraging computer vision. We do have a few use cases where we leverage computer vision. When it comes to directly customer-facing features, we of course use autoencoders or models trained with a triplet loss to help with similarity lookups. The real value, however, I believe lies in leveraging that rich embedding space to maintain a rich and diverse feature space that does not depend on a lot of historical data. It allows you to use product imagery to power customer personalization and to introduce abstract concepts into your search index.
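The similarity lookups mentioned here rest on two small pieces: a triplet loss that, during training, pulls an anchor embedding toward a matching (positive) product and pushes it away from a non-matching (negative) one by at least a margin, and a nearest-neighbour search over the learned embeddings at serving time. The sketch below shows both with plain Python lists. The 3-dimensional embeddings and product names are hypothetical; a real system would produce embeddings with a trained network and would typically use an approximate-nearest-neighbour index rather than brute force.

```python
import math
from typing import Dict, List, Sequence, Tuple


def triplet_loss(anchor: Sequence[float], positive: Sequence[float],
                 negative: Sequence[float], margin: float = 1.0) -> float:
    """max(0, d(a, p) - d(a, n) + margin): zero once the negative is at least `margin` farther away."""
    return max(0.0, math.dist(anchor, positive) - math.dist(anchor, negative) + margin)


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def most_similar(query: Sequence[float], catalog: Dict[str, List[float]],
                 top_k: int = 2) -> List[Tuple[str, float]]:
    """Brute-force nearest neighbours by cosine similarity, best match first."""
    scored = [(name, cosine_similarity(query, emb)) for name, emb in catalog.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:top_k]


# Hypothetical image embeddings for three products.
catalog = {
    "red_runner": [0.9, 0.1, 0.0],
    "crimson_runner": [0.8, 0.2, 0.1],
    "black_boot": [0.0, 0.1, 0.9],
}
# Well-separated triplet: the negative is already more than the margin away, so the loss is 0.
print(triplet_loss(catalog["red_runner"], catalog["crimson_runner"], catalog["black_boot"]))
# Look up the closest catalog items for a new (hypothetical) product photo's embedding.
print(most_similar([0.85, 0.15, 0.05], catalog))
```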

MODERATOR: To learn a bit about the human behind the AI brain…

- What's your favorite ice cream flavor?

- Favorite place you’ve traveled?

- Your first paying job?

AMEEN:

- I tell people dulce de leche Häagen-Dazs, but it's really Phish Food.

- I spent a week in Maui last year, and it was fantastic. It was the location coupled with how much I needed the break.

- An acting gig in a local production in Bombay, India.

MODERATOR: And that’s a wrap! Thank you, Ameen, for participating. Do you have any final plugs or messages you’d like to put in this channel before signing off?

AMEEN: Thank you for having me! This was an absolute pleasure: a super engaged crowd and some great questions. Looking forward to seeing you all at Ai4. Stay safe, stay healthy, and try to stay home in these tough times.

Recent Posts

Developing Computer Vision Applications in Data Scarce Environments

Introduction In today’s digital era, computer vision stands as a transformative technology, driving innovations across...

By Sumedh DatarDecember 12, 2023

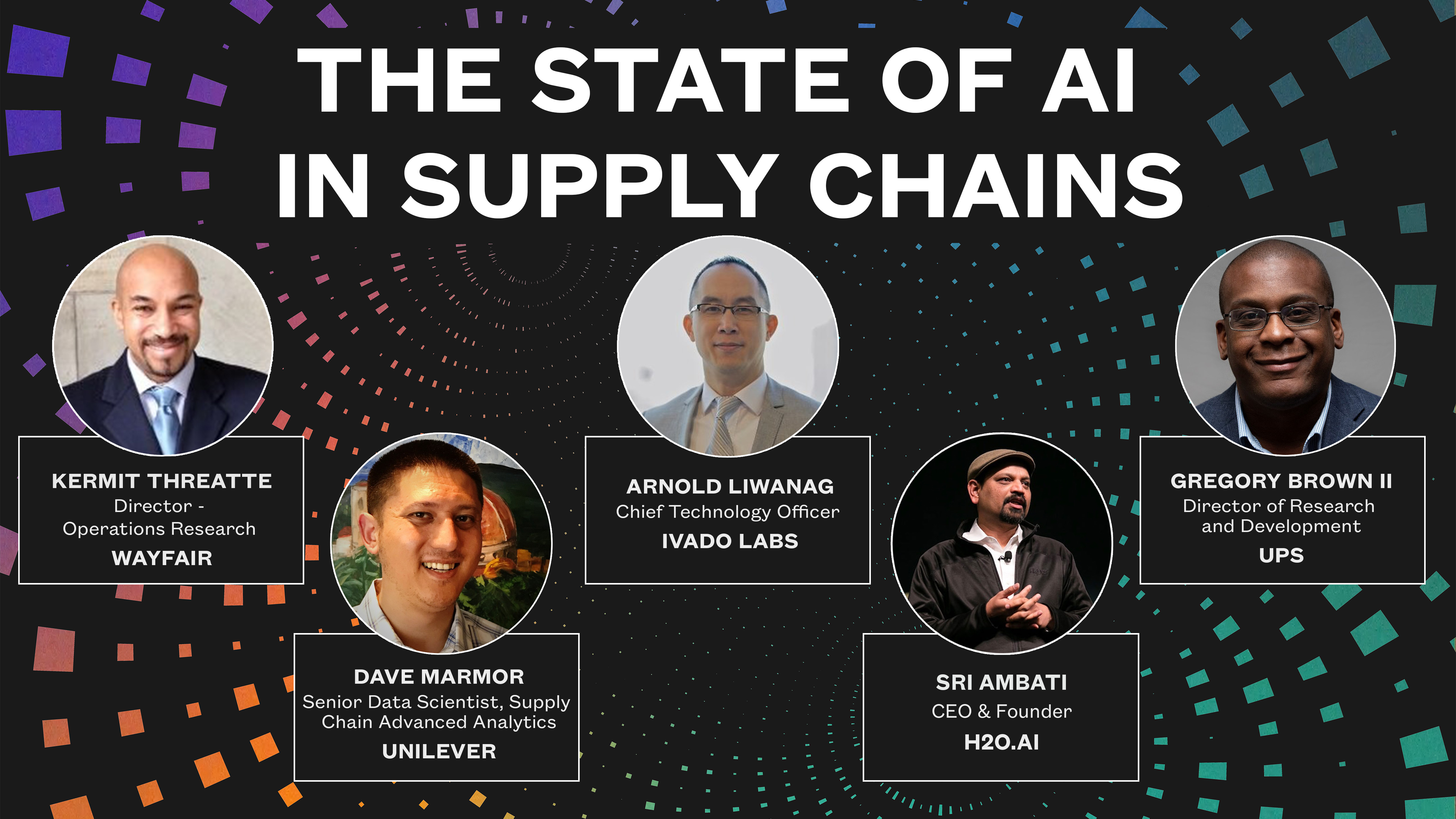

Bottlenecks in Supply Chains & How AI Can Help

During this panel, industry experts (showed above) discussed the impact of COVID-19 on AI on...

By Ai4May 05, 2020

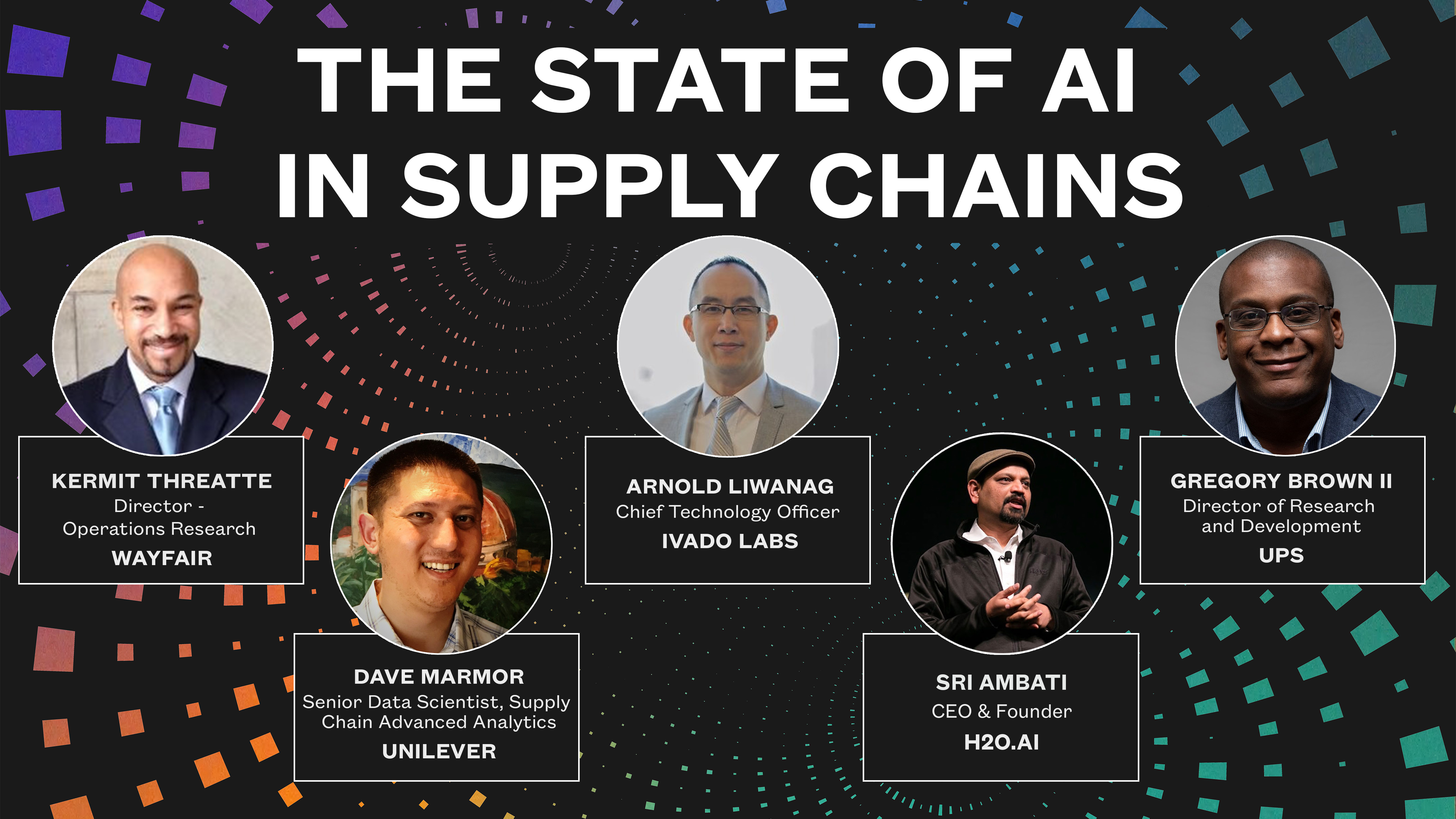

How COVID-19 is Impacting the State of AI in Supply Chains

During this panel, industry experts (showed above) discussed the impact of COVID-19 on AI on...

By Ai4May 04, 2020