Head of AI Policy

H&M

Introduction

Linda leads the work on sustainable and ethical AI at H&M, including delving into such questions as “what does ‘responsible AI’ mean for a retail company?” and “what is the connection between AI and sustainability?” Also: “how do we get the conversation started on digital ethics?”

Linda Leopold biography

Linda joined the advanced analytics & AI function at H&M Group in 2018. This was after many years in the media industry and working as an innovation strategist at the intersection of fashion and technology.

As a published author and former editor-in-chief of the fashion magazine Bon, Linda brings a wealth of experience in merging fashion and art with science and technology. Linda has written two non-fiction books and has studied journalism and project management at Stockholm University.

Initial AI ethical considerations in retail

AI development is a constant flow of amazement, hope and fear. Like all technology, AI can be used for good and for bad. The same algorithm can be used to improve healthcare or to produce fake-news videos. Today’s AI can be an enormous benefit to society and also a threat to democracy, depending on how it is used.

Who should we blame when things go wrong, and who should get credit when things succeed? Is it the creator of the algorithm, the user, the trainer, or the AI model itself?

The tricky thing about machine learning is that, even when its creators have the best intentions, things can still go terribly wrong.

Example: chatbots becoming racist or image recognition systems trained on unrepresentative data. None of these were meant to cause any harm, and yet they did.

Every industry faces different AI challenges. Retail AI challenges may seem trivial compared to, say, healthcare AI challenges. So why should a retailer care about AI ethics? The simple answer is that we care about our customers and the world around us.

Bottom line: we have created a very powerful technology that is still very immature. We haven’t really learned how to control it yet.

Consequences of mishandling and misunderstanding ethics

Trust in business is at an all-time low today. Failing to monitor technology for unintended consequences can lead to public embarrassment and scandals. It can harm people’s privacy, health or lives, and can damage a business’s reputation and shareholder value.

Taking a clear stand, on the other hand, can differentiate your company from the competition and result in higher customer satisfaction.

Problem: technology is developing faster than legislation. It’s not enough just to follow the law. AI opens up so many new possibilities, but just because we can do something doesn’t mean that we should do it. The discussion has to go from “are we compliant?” to “are we doing the right thing?”

H&M’s AI department covers the entire value chain, from design to customer experience.

AI is being used to:

- better forecast trends and predict demand.

- create the right assortment for different stores.

- optimize prices during sales, both online and offline.

- give customers more relevant recommendations and offers.

H&M Group sustainability goals

- 100 percent leading the change

- 100 percent circular and renewable

- 100 percent fair and equal

Sustainability is core to the company’s identity. Globally, H&M has 270 people working on sustainability, which makes it one of the largest sustainability organizations in the retail industry.

Problem: the H&M sustainability department, like many others, was created in an analog world. Its focus was mainly on the environment and working conditions in the supply chain.

How do you transfer these policies to the AI era? Use AI to reach the company’s sustainability goals:

Do good:

- Use 100 percent recycled or sustainably sourced materials by 2030.

- Create a climate-positive value chain by 2040.

Do no harm:

- Work with AI in a responsible way.

With the help of AI, supply and demand can be aligned much more precisely. This can lead to less transport and warehousing.

Solution: create a checklist for responsible AI. Make sure your goals are:

- Focused

- Beneficial

- Fair

- Transparent

- Governed

- Collaborative

- Reliable

- Respecting privacy

- Secure

Your checklist should cover issues such as:

- Algorithm bias

- Diversity in our AI teams

- Technical questions involving security

Pose your questions in an open-ended way; the goal is more about starting a conversation and less about ticking boxes.

Example of how to create an open-ended question:

- If the question is: does the use case have a negative effect on vulnerable groups?

The open-ended question becomes:

Have you discussed how the use case affects vulnerable groups?

Bottom line: open-ended questions force you to think in another way.

An example of how to keep an AI ethics conversation from becoming too “abstract”

- Create an “ethical AI debate club,” inviting people from different parts of the company to meet up for a debate.

- Write fictional ethical dilemmas that could take place in your industry today or in the near future. People have to argue a pre-assigned standpoint. That may not be their actual opinion, but that’s part of the exercise. It forces you to find arguments even if you don’t personally agree with them.

- Allow for four to seven minutes of pro-and-con arguments, and then the audience votes. Note: there is usually no winning standpoint, which shows how difficult these questions can be.

The goal is to dive deeper into the topics and challenge your initial gut feeling and, in many cases, to tackle conflicting values.

- For example, choosing between social and environmental sustainability. Discussions like these give you the opportunity to really think through your values. We can sharpen our standpoint and avoid making mistakes in the real world.

Company vs. customer

Many of these questions involve the customer rather than the company.

- Example: do you buy local or global?

AI development is affecting us in many of our different roles, both as business decision makers and as customers and citizens.

Bottom line: AI is not a force of its own. We have a shared responsibility in getting AI right. We need to work together to involve more people in the discussion of AI and ethics. If responsible AI is the question of our time, we also have to make it the conversation of our time.

Recent Posts

Developing Computer Vision Applications in Data Scarce Environments

Introduction In today’s digital era, computer vision stands as a transformative technology, driving innovations across...

By Sumedh DatarDecember 12, 2023

Bottlenecks in Supply Chains & How AI Can Help

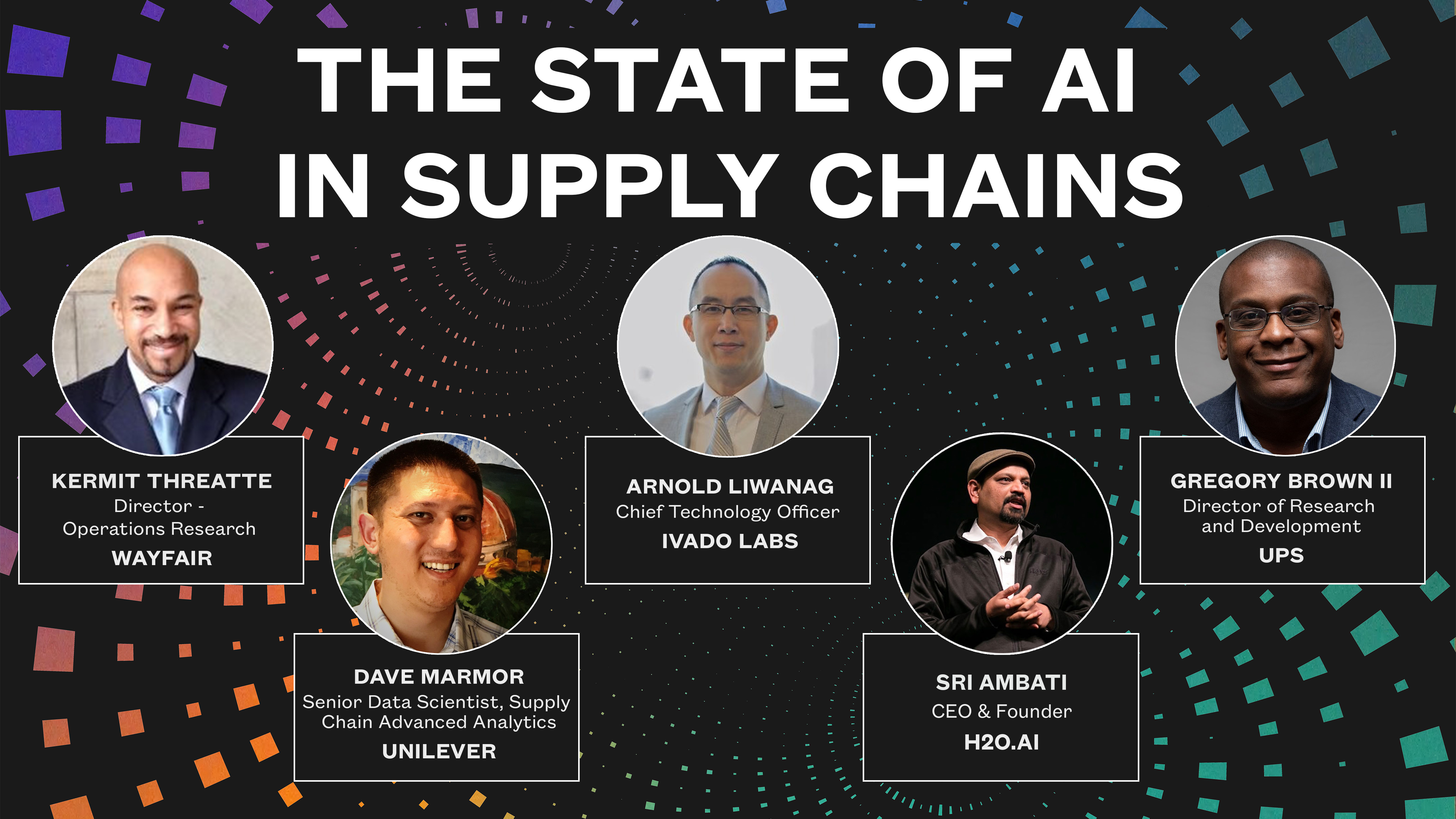

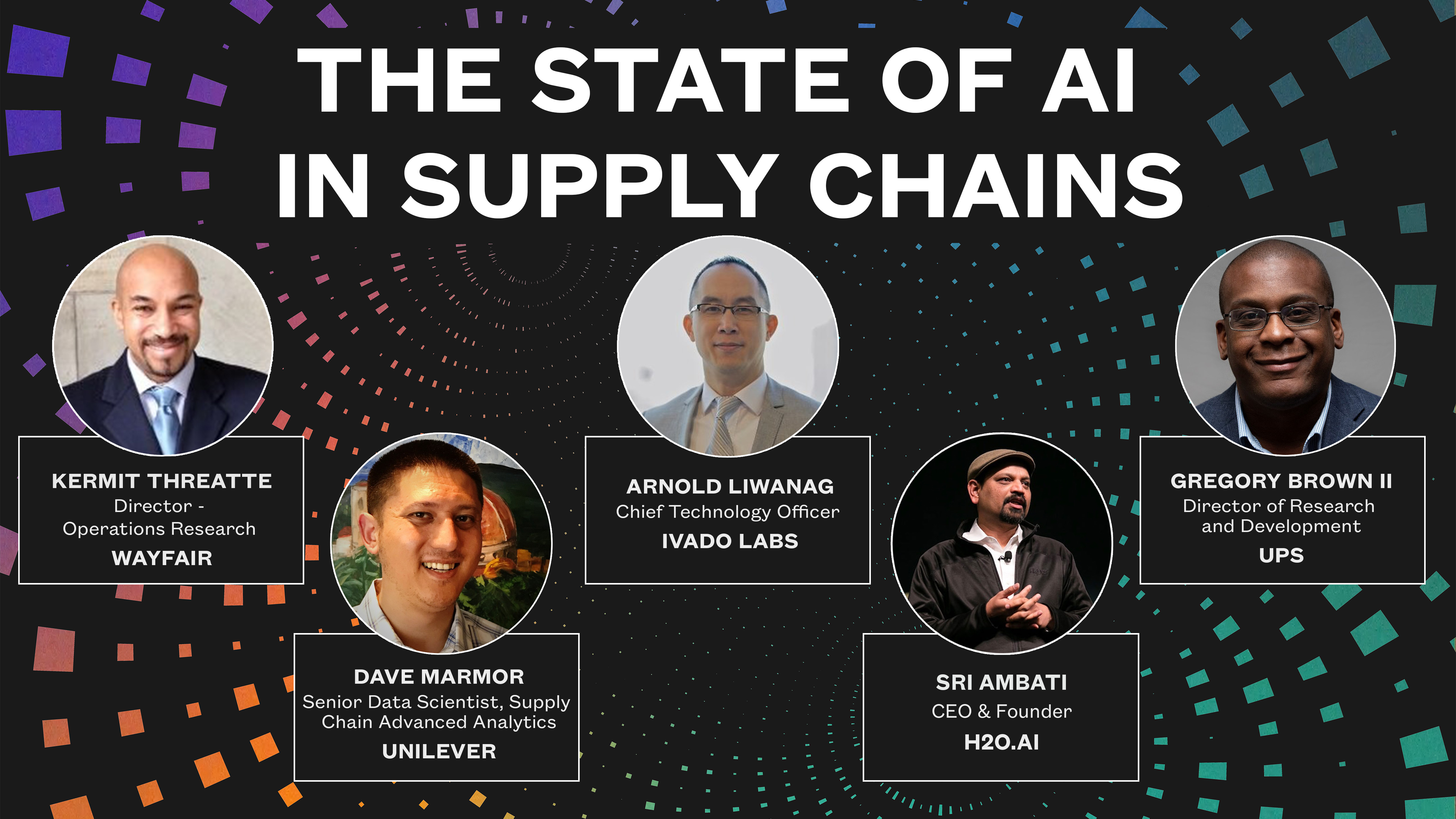

During this panel, industry experts (showed above) discussed the impact of COVID-19 on AI on...

By Ai4May 05, 2020

How COVID-19 is Impacting the State of AI in Supply Chains

During this panel, industry experts (showed above) discussed the impact of COVID-19 on AI on...

By Ai4May 04, 2020